Introduction

Every interaction in healthcare has the potential to shape both patient outcomes and professional relationships. For Certified Registered Nurse Anesthetists (CRNAs), the ability to communicate with confidence and empathy is essential to navigating complex, hierarchical teams while caring for patients in their most vulnerable state. Nurse anesthesia programs dedicate extensive time to technical expertise, but leadership communication training is often limited, leaving students with few opportunities to rehearse and refine these skills before entering clinical practice. Addressing this gap requires training that is realistic, adaptable, and accessible to diverse learners.

Advances in educational technology facilitate new approaches to address these needs. Intelligent tutoring systems (ITS) have long supported personalized learning through rule-based logic and cognitive models, offering a scalable approach to skill development.3 Large language models now add generative and dialogic capabilities, accelerating the integration of artificial intelligence (AI) into education.3–5 When grounded in sound ITS design, these tools can create emotionally responsive agents that support realistic, adaptive communication training, an approach already leveraged in sectors such as business, aviation, and the military.6,7

Despite these capabilities, most AI applications for healthcare education target diagnostic reasoning or virtual patient encounters, with less attention to communication and leadership skill development.3,8 This lack of emphasis is especially consequential for CRNAs, whose role frequently requires high-stakes interdisciplinary conversations. When these skills are taught, practiced, and mastered, they can directly affect patient safety, timely escalation during emergencies, and effective advocacy for resources across interprofessional teams.9,10 Prior research synthesized in a recent scoping review of AI-based communication training in healthcare shows that effective communication is associated with higher patient satisfaction.8 The same review found only a small number of AI-based systems for healthcare communication training, which were often narrowly focused and descriptive in nature.8 AI tools for teaching interprofessional communication remain rare, and many struggle with natural language generation, contextual responsiveness, and practical implementation demands.8,11–13 Together, these findings highlight both the promise and current shortcomings of AI-enhanced communication training.

To explore this potential, the authors developed and pilot-tested “Pat Maxwell,” a virtual hospital executive, powered by ChatGPT-4,2 who simulates high-pressure leadership conversations. Built on ITS principles and emotionally adaptive design, the simulation responds in real time to the learner’s communication strategies.14 Unlike standardized patients, scripted simulations, or conventional AI chatbots, Pat adapts to the learner’s professionalism, empathy, and strategic clarity by adjusting tone, openness, and resistance.

This exploratory pilot study evaluated the implementation, acceptability, and early educational value of the AI-enhanced simulation within a doctoral nurse anesthesia leadership course. Specifically, we examined: (1) the feasibility of implementing AI-based communication simulation in a graduate curriculum; (2) student perceptions of realism, educational value, and relevance; (3) changes in self-reported confidence and preparedness; and (4) qualitative insights into how students applied structured communication strategies during the simulation. Findings from this pilot are intended to guide the development of future AI-enhanced simulations for leadership, advocacy, and communication training in nurse anesthesia education, while also informing broader applications across disciplines and adapting to varied learning styles.

Methods

Study Design

A mixed-methods pilot design was used, combining pre- and post-simulation surveys with thematic analysis of individual reflections and group debrief surveys. The approach emphasized pragmatic implementation and learner experience over experimental control, consistent with the study’s exploratory objectives.

Participants and Setting

Participants were first-year nurse anesthesia residents enrolled in a doctoral leadership course at a single U.S. university in Summer 2025. The research team obtained Institutional Review Board approval prior to data collection. Recruitment occurred through an in-class announcement, and consent was obtained electronically via a pre-simulation survey. Of the 99 enrolled students, 94 consented to participate and completed the simulation as well as both the pre- and post-simulation surveys. Although the simulation was a required component of the coursework, participation in the study was voluntary and did not affect students’ course grades. Data were collected only from students who provided informed consent. Students were informed they could withdraw from the study at any time without penalty. Data were de-identified and stored in accordance with institutional protocols.

Learners worked in self-selected teams of 4–8 individuals for the simulation and debriefing, consistent with the structure used throughout the course. Team composition was recorded during the consent process. Two teams with non-consenting members were excluded from qualitative analysis, and one learner was absent on the day of the simulation.

The final dataset included:

- 94 pre- and post-simulation surveys (unmatched)
- 78 individual reflections
- 12 group debrief surveys from fully consenting teams

Intervention

The simulation featured a 30-minute interaction with “Pat Maxwell,” a virtual hospital executive powered by ChatGPT-4. Integrated into the leadership course’s professional communication module, the experience was designed to move students beyond theoretical knowledge by providing deliberate practice in structured advocacy and communication techniques during a realistic leadership scenario.

Prior to the simulation, students received a one-hour lecture on 8 evidence-based communication strategies relevant to clinical leadership and professional negotiation: Situation, Background, Assessment, Recommendation (SBAR); Describe, Express, Suggest, Consequences (DESC); Ask–Tell–Ask; Advocacy & Inquiry; Refocusing on Shared Goals; Framing; key Team Strategies and Tools to Enhance Performance and Patient Safety (TeamSTEPPS) principles; and the Seven Steps for Difficult Conversations (see Appendix A for brief definitions). These strategic frameworks have been shown to streamline information delivery, improve escalation of concerns, and increase mutual understanding.9,15–17 Several, such as TeamSTEPPS and SBAR, originated as U.S. military initiatives and were subsequently adapted for healthcare settings, where they have contributed to gains in teamwork and patient safety.10 All frameworks were intentionally selected by the research team for alignment with course and program objectives. They were introduced through a lecture with brief case discussions, laying the groundwork for applied use during the simulation.

The simulation scenario mirrored common professional challenges, including organizational resistance, hierarchical dynamics, and emotional complexity. Each team was tasked with responding to a fictional hospital announcement proposing significant changes to CRNA staffing, framed as a contract renegotiation scenario. All teams received an identical, standardized email prompt from Pat Maxwell and were instructed to treat the simulation as a formal stakeholder meeting.

Simulation Design

Pat Maxwell, the AI-powered hospital executive, was designed as a socially and emotionally responsive conversational agent. To support psychological fidelity, Pat’s persona was deliberately constrained to reflect a risk-averse, cost-conscious healthcare leader. Guardrails were embedded to prevent character drift and ensure consistent behavior throughout the simulation, with a concealed scoring rubric guiding these adaptive responses. This goal-directed persona design aligns with emerging literature on emotionally grounded, instructionally aligned agents.4

The simulation incorporated key constructs from ITS, including domain knowledge (what is being assessed), tutoring knowledge (how the system responds), and student modeling (how the system adapts to learner behavior). These principles were operationalized through a concealed scoring rubric that interpreted learner tone, strategy, and professionalism to adjust Pat’s stance, tone, and resistance throughout the simulation.3,4,6,7 The rubric directed when to reward effective strategies or introduce resistance and was designed to discourage vague statements, flattery, references to communication frameworks without application, and stalling tactics. This structure kept Pat’s responses both instructionally consistent and strategically challenging, prompting precise, purposeful communication from learners.14,18 Although the rubric itself was not analyzed due to limited external validation, it played a critical role in shaping conversation flow and reinforcing the simulation’s learning objectives.
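The rubric-driven adaptation described above can be illustrated with a small conceptual sketch. The study's concealed rubric is not reproduced in this paper, and in the actual simulation the adaptation was carried out by the language model rather than by explicit code, so everything below is hypothetical: the behavior labels, the point values, the thresholds, and the `AgentState` class are illustrative assumptions only.

```python
from dataclasses import dataclass

# Hypothetical rubric: behavior labels and point values are illustrative
# assumptions, not the concealed rubric used in the study.
REWARDED = {"specific_proposal": 2, "shared_goal": 2, "empathy": 1}
DISCOURAGED = {
    "vague_statement": -1,
    "flattery": -1,
    "framework_name_dropping": -1,  # naming a framework without applying it
    "stalling": -2,
}


@dataclass
class AgentState:
    """Minimal student model: a running score that sets the agent's stance."""

    score: int = 0

    def update(self, observed_behaviors):
        """Add rubric points for each behavior seen in the learner's turn."""
        for behavior in observed_behaviors:
            self.score += REWARDED.get(behavior, 0) + DISCOURAGED.get(behavior, 0)
        return self.stance()

    def stance(self):
        """Map the running score to a stance (thresholds are assumptions)."""
        if self.score >= 4:
            return "open"
        if self.score <= -2:
            return "resistant"
        return "guarded"


state = AgentState()
print(state.update(["flattery", "stalling"]))                  # score is now -3
print(state.update(["specific_proposal", "shared_goal", "empathy"]))  # score is now 2
```

The sketch separates the three ITS constructs named above: the two dictionaries stand in for domain knowledge, `stance` for tutoring knowledge, and the running score for the student model.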

Consistent with ITS best practices, the agent did not provide direct corrective feedback during the conversation, preserving dialogue flow and learner agency.3,4,14,18 However, participants could request tailored, strategy-based feedback at the conclusion by typing “evaluation,” which triggered a summary generated from the same concealed rubric that guided Pat’s responses.

The simulation was designed in alignment with the Healthcare Simulation Standards of Best Practice™ from the International Nursing Association for Clinical Simulation and Learning (INACSL), emphasizing psychological fidelity, outcome alignment, and realism.19 These standards informed scenario authenticity, learner-centered debriefing, and clearly defined objectives, ensuring that both AI design and educational intent were integrated from the outset.

Simulation Procedures

Pre-briefing and Instructional Preparation

Students participated in a standardized pre-briefing session that facilitated psychological safety, clarified procedures, and introduced the context of the simulation.19 Each team received: (1) the scenario prompt; (2) a summary handout of structured communication strategies; (3) a professional bio of Pat Maxwell; and (4) background materials outlining the fictional hospital and proposed staffing model. Clarifying questions were permitted, but no coaching or tactical guidance was provided.

Team Preparation

Teams had 20 minutes to develop a communication strategy using course materials and publicly available sources. Generative AI tools outside of the simulation parameters were explicitly prohibited.

Simulation Session

Each team engaged in a 30-minute live negotiation with the AI agent via the ChatGPT interface, conducted in an instructor-monitored classroom over secure university Wi-Fi. Teams engaged with the simulation until consensus was reached or the time limit expired. The session was designed to reflect the time-pressured, collaborative nature of real-world professional interactions.

Debriefing and Reflection

Following the simulation, each team submitted their transcript log and participated in a facilitated group debriefing session. These discussions focused on communication strategies, team dynamics, and interpretation of Pat’s responses, in alignment with INACSL’s best practices for reflective debriefing.19

Data Collection and Instruments

Both quantitative and qualitative data were collected from multiple sources to capture learner perceptions and communication behaviors and evaluate the simulation’s educational impact.

Pre- and Post-Simulation Surveys

All consenting participants completed anonymous electronic surveys. Four core 5-point Likert items were administered before and after the simulation to assess: (1) confidence initiating difficult conversations, (2) understanding of communication tools, (3) ability to balance empathy and assertiveness, and (4) preparedness to advocate professionally. Three additional post-only items measured realism, educational value, and likelihood of recommending the simulation. Surveys were anonymous and not individually matched.

Open-Ended Survey Items

The same post-simulation survey included 3 open-response prompts that asked students to describe: (1) the most significant communication challenges they encountered, (2) strategies used during the simulation, and (3) how the experience might influence their future practice.

Individual Written Reflections

As a required course activity, each student submitted a 100- to 200-word reflection after the simulation. Prompts emphasized team performance, the AI agent’s behavior, and lessons learned about leadership communication. Only reflections from consenting students on fully consenting teams were analyzed (n = 78).

Group Debrief Surveys

After facilitated debriefing sessions, teams submitted brief narrative surveys describing their communication approach and team strategy. Twelve group surveys from fully consenting teams were analyzed.

Simulation Transcripts and AI Scores

Teams submitted transcripts of their real-time AI interactions. These transcripts, along with the AI-generated rubric scores, were reviewed by the instructional team to verify simulation fidelity. Because the AI rubric lacked established validity, automated scores were excluded from research analysis and treated only as instructional artifacts.

Data Analysis

Quantitative Analysis

Data were exported from electronic surveys and analyzed in Jamovi (version 2.7.4).20 Descriptive statistics (means, standard deviations, medians, and ranges) were calculated to summarize each item and guide interpretation. Assumptions of normality and homogeneity of variance were assessed prior to inferential testing. Shapiro–Wilk tests revealed non-normal distributions across all items (P < .001), and Levene’s test indicated unequal variances for 2 of the 4 pre- and post-simulation items. Accordingly, nonparametric methods were used throughout.
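For readers who wish to reproduce this type of assumption check outside Jamovi, a minimal Python sketch using SciPy follows. It is not the authors' workflow: the response values are hypothetical, the function name `check_assumptions` is illustrative, and SciPy's `levene` centers on the median by default, which may differ from the centering Jamovi applies.

```python
from scipy import stats


def check_assumptions(pre, post):
    """Return Shapiro-Wilk p-values per group and Levene's p-value across groups."""
    shapiro_pre = stats.shapiro(pre)
    shapiro_post = stats.shapiro(post)
    # SciPy default is median-centered; pass center="mean" for the classic form.
    levene = stats.levene(pre, post)
    return {
        "shapiro_pre_p": float(shapiro_pre.pvalue),
        "shapiro_post_p": float(shapiro_post.pvalue),
        "levene_p": float(levene.pvalue),
    }


# Hypothetical 5-point Likert responses for one item (NOT the study data).
pre_item = [2, 3, 3, 3, 4, 4, 4, 4, 5, 3, 2, 4]
post_item = [4, 4, 5, 5, 5, 4, 5, 5, 5, 4, 5, 5]

checks = check_assumptions(pre_item, post_item)
# If any assumption is violated at alpha = .05, fall back to nonparametric tests.
use_nonparametric = any(p < 0.05 for p in checks.values())
print(checks, "-> use nonparametric tests:", use_nonparametric)
```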

Because surveys were anonymous and lacked individual identifiers, pre- and post-simulation responses were treated as independent samples. Group comparisons were conducted using the Mann–Whitney U test. Effect sizes were calculated using rank biserial correlation, with significance defined as P < .05. All analyses were exploratory and not intended to support causal inference.
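The comparison described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' Jamovi procedure: the data are hypothetical, the function name `mann_whitney_with_effect_size` is illustrative, and the code assumes SciPy 1.7 or later, where `mannwhitneyu` returns the U statistic for the first sample. The rank-biserial correlation is computed as r = 2U/(n1·n2) − 1, so positive values indicate higher post-simulation ratings.

```python
from scipy import stats


def mann_whitney_with_effect_size(pre, post):
    """Two-sided Mann-Whitney U test with rank-biserial correlation.

    Samples are treated as independent because responses are unmatched.
    """
    n_pre, n_post = len(pre), len(post)
    # Passing post first makes U count (post, pre) pairs in which post ranks
    # higher, with tied pairs contributing 0.5 each.
    result = stats.mannwhitneyu(post, pre, alternative="two-sided")
    u_post = float(result.statistic)
    r_rank_biserial = 2.0 * u_post / (n_pre * n_post) - 1.0
    return u_post, float(result.pvalue), r_rank_biserial


# Hypothetical 5-point Likert responses for one item (NOT the study data).
pre_item = [2, 3, 3, 3, 4, 4, 4, 4, 5, 3, 2, 4]
post_item = [4, 4, 5, 5, 5, 4, 5, 5, 5, 4, 5, 5]

u, p, r = mann_whitney_with_effect_size(pre_item, post_item)
print(f"U = {u:.1f}, P = {p:.4f}, rank-biserial r = {r:.2f}")
```

Because r is a simple rescaling of U, it can be read as the proportion of pre/post pairs favoring the post-simulation group minus the proportion favoring the pre-simulation group.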

Qualitative Analysis

Qualitative data sources (open-ended survey items, individual reflections, group debrief surveys, and transcripts) were analyzed thematically using a hybrid deductive–inductive approach. The analysis explored how students described their use of communication strategies during the simulation. It followed principles of framework analysis, including matrix-based coding, operational definitions, and team-based consensus building.21

Coding Procedures

Theme categories were initially derived deductively from the structured communication strategies emphasized in the course. Additional patterns emerged through iterative review of the qualitative data and were refined collaboratively to ensure instructional relevance and clarity. The analysis prioritized observable behaviors over abstract impressions to support consistency across reflections and group responses.

Six behavior-based themes were finalized through iterative team review. These themes represented practical communication behaviors aligned with course objectives and served as the coding framework:

- Tone Adjustment – modifying tone, affect, or delivery style during the conversation
- Strategic Reframing – pivoting or rephrasing in response to the agent’s stance
- Leadership Growth – describing insight, confidence, or development in leadership skills
- Professionalism Under Pressure – maintaining professionalism during moments of challenge
- Listening to Adapt – adjusting responses based on cues or feedback from the agent
- Team Dynamics – describing collaborative planning, shared strategy, or role division

Each source was independently coded by 2 raters using a binary scheme (1 = present; 0 = absent), with coding guided by operational definitions and clarified presence criteria.

Reliability

Interrater agreement was assessed using percent agreement. Discrepancies were resolved through consensus discussion. Final theme frequencies were derived from the adjudicated dataset.
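As a worked illustration of these calculations, the sketch below computes percent agreement between two raters and the frequency of a theme in an adjudicated dataset under the binary scheme (1 = present; 0 = absent). The codes shown are hypothetical and the function names are illustrative; they do not reproduce the study's coding data.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of sources on which two raters assigned the same binary code."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of sources.")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


def theme_frequency(adjudicated_codes):
    """Percentage of sources in which a theme was coded present (1)."""
    if not adjudicated_codes:
        raise ValueError("At least one coded source is required.")
    return 100.0 * sum(adjudicated_codes) / len(adjudicated_codes)


# Hypothetical codes for one theme across eight sources (NOT the study data).
rater_1 = [1, 0, 1, 1, 0, 1, 0, 1]
rater_2 = [1, 0, 0, 1, 0, 1, 1, 1]
adjudicated = [1, 0, 1, 1, 0, 1, 0, 1]  # after consensus discussion

print(f"Agreement: {percent_agreement(rater_1, rater_2):.1f}%")
print(f"Theme present in {theme_frequency(adjudicated):.1f}% of sources")
```

Percent agreement does not correct for agreement expected by chance, so it is best read as a descriptive check on coding consistency.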

Findings

Quantitative Results

Pre/Post Comparison: Confidence and Preparedness

Analyses were based on survey responses from 94 consenting participants. Descriptive statistics are provided for interpretability, and group comparisons were analyzed using nonparametric tests. Post-simulation participants reported significantly higher scores on all 4 pre- and post-simulation items: confidence initiating difficult conversations, balancing assertiveness and empathy, preparedness to advocate professionally, and understanding structured communication tools (all P < .001; Table 1). Effect sizes ranged from small (r = 0.26) to moderate (r = 0.40), with the largest improvement observed in understanding structured communication tools.

Post-Simulation Evaluation: Realism and Educational Value

In addition to pre- and post-simulation comparisons, 3 post-only items assessed students’ perceptions of the simulation. Ratings were consistently high across all domains, with mean scores above 4.3 on the 5-point Likert scale (Table 1). Students indicated that the simulation was realistic, enhanced their understanding of evidence-based communication strategies, and was highly recommendable for future cohorts. These results suggest that the simulation was both educationally valuable and strongly endorsed for continued integration into the curriculum.

Qualitative Results

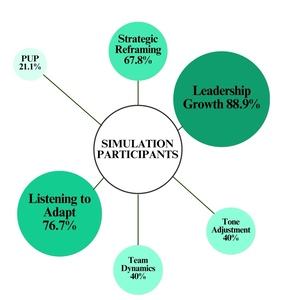

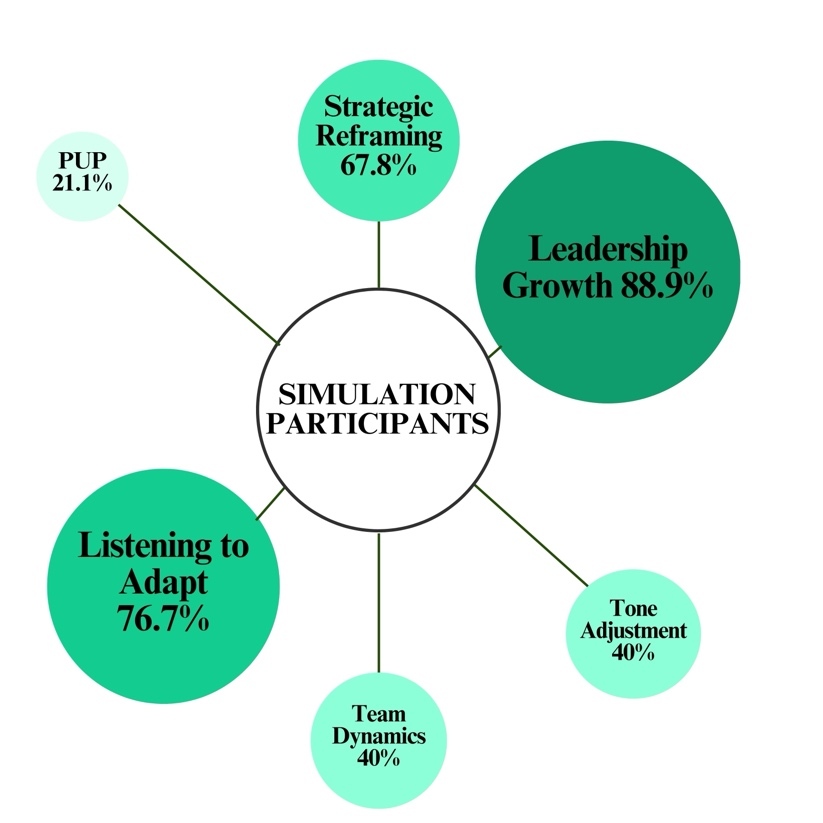

Ninety qualitative sources (78 individual reflections and 12 group debrief surveys) were analyzed using the 6 behavior-based themes. The analysis emphasized observable communication behaviors, adaptive decision-making, and reflections on leadership development. Patterns across responses revealed frequent use of strategic reframing, listening to adapt, and leadership growth. Students described modifying their approach in response to Pat’s resistance, taking cues from his tone or content, and developing confidence in navigating difficult conversations. While learners did not explicitly name communication frameworks, their language reflected practical uptake of strategies such as SBAR, DESC, and Ask–Tell–Ask.

Three themes emerged as most prevalent:

- Leadership Growth (88.9%) captured student-reported increases in confidence, adaptability, and self-advocacy. One student wrote, “Sometimes, you have to be bold and stand up. They’re not always going to be kind.” Another noted, “I realized the importance of being clear, confident, and focused when speaking with people in authority.”
- Listening to Adapt (76.7%) highlighted the importance of responding to Pat’s tone and cues: “We noticed it became apparent he did not want pleasantries but wanted blunt honesty.” Similarly, another explained, “We adapted to the answers he provided in order to best provide the answers he was looking for.”
- Strategic Reframing (67.8%) described how students modified their language or rationale to align with institutional priorities such as safety, efficiency, and collaboration. One group described how they “were able to strategize differently in later responses, tailoring a solution for [Pat] rather than an argument against his ideas. After using this approach, he accepted [their] new model suggestion.”

In contrast, the remaining themes were observed less frequently but still provide important insight into how students managed delivery and group interaction.

- Tone Adjustment and Team Dynamics each appeared in 40.0% of responses, suggesting moderate attention to delivery style and group strategy. One student noted, “Pat Maxwell caught me off guard when he did not even want to have any small talk from the beginning… this tells me he wants to go straight to the point,” illustrating how learners adjusted tone in response to perceived cues. At the team level, one group noted, “We went back and forth and ultimately had to compromise on what we were asking for so that we were all aligned in our response.” This highlights how collaborative adjustment shaped outcomes and reinforced the need for unified messaging under pressure.
- Professionalism Under Pressure (21.1%) was the least frequent theme, highlighting instances in which students explicitly noted maintaining composure under tension. For example, one participant shared, “The AI negotiation taught me to stay calm and professional even when the conversation felt tense and intimidating.”

Overall, the simulation engaged students in the structured communication behaviors targeted by the instructional design. Patterns of adaptation, reframing, and growth highlight how participants navigated resistance and reflected on their development as emerging leaders. Table 2 and Figure 1 depict the frequency of theme presence across all qualitative sources.

Discussion

This exploratory pilot study demonstrates that an AI-enhanced simulation grounded in ITS principles can feasibly support structured communication training in nurse anesthesia education. Quantitative results showed statistically significant improvements in students’ self-reported confidence and preparedness, while qualitative analysis highlighted students’ strategic adaptation, reframing, and responsiveness to resistance. Recent literature suggests that generative AI enhancements to ITS, such as dynamic scenario generation and real-time adaptability, can further personalize learning experiences.4,5

Survey findings revealed small-to-moderate, yet educationally meaningful, effect sizes across 4 domains: initiating difficult conversations, balancing assertiveness with empathy, advocating for one’s role, and applying structured communication tools (P < .001; r = 0.262–0.402). Students also rated the simulation as realistic and relevant to their future practice, reinforcing its acceptability and perceived value within the graduate curriculum. These gains align with Council on Accreditation of Nurse Anesthesia Educational Programs (COA) graduate competencies in interprofessional communication and leadership, suggesting that such simulations can address both curriculum objectives and recognized gaps in applied communication practice.1

Thematic analysis of student reflections supported and expanded these outcomes. Rather than simply naming techniques, students described adjusting tone, modifying framing, and interpreting feedback embedded in the AI agent’s responses, without realizing their behavior was being scored. Such adaptability mirrors the dynamic decision-making required in clinical environments, where providers must pivot strategies in response to situational cues and interpersonal dynamics. The AI-generated rubric scores, although excluded from formal analysis, provided educational artifacts suggesting that the most effective teams demonstrated professionalism, flexibility, and alignment with shared goals. These behaviors elicited more collaborative responses from the AI-driven character, advancing negotiation and modeling professional strategies for overcoming hierarchy and building trust. Collectively, the findings indicate that the simulation fostered both knowledge application and real-time strategic reasoning, a core objective of ITS-based instruction.6,14

The simulation design deliberately preserved psychological safety, realism, and instructional alignment. These principles are essential for trustworthy, pedagogically sound AI agents.22 Learners were briefed that the exercise was a no-fault learning experience, and the AI was programmed to reward effective communication, thereby maintaining trust in the process. Notably, the AI functioned in dual roles: role-player and tutor. While students were unaware of its internal scoring, these assessments shaped the AI’s responses: challenging timid learners to be more assertive or encouraging tact when interactions became too forceful. In this way, the AI’s adaptive feedback provided embedded formative assessment, as learners indicated they could assess their progress from the agent’s responses. This approach reflects scaffolding principles in ITS design and demonstrates how generative AI can deliver real-time, context-sensitive coaching at scale.23

From an educational perspective, this work contributes to a growing body of evidence supporting simulation as a tool for developing non-technical skills such as leadership, communication, and professional advocacy.8 While many ITS applications remain concentrated in procedural domains like science, technology, engineering, and mathematics education, this study illustrates their relevance to emotionally nuanced communication competencies such as persuasion, strategic reframing, and interprofessional dialogue. By simulating realistic hierarchies and embedding feedback into the AI responses, the system offered learners a psychologically safe space to practice assertiveness, empathy, and adaptability. As generative AI becomes more accessible in education, its potential to scale personalized, context-rich learning experiences may extend well beyond healthcare. These observations underscore the value of immersive simulation for practicing higher-order communication skills.

Business and Leadership Implications

Although the immediate focus of this simulation was communication skill development, its design also speaks to the broader business and leadership responsibilities inherent in anesthesia practice. CRNAs serve as both clinicians and organizational leaders, navigating contract negotiations, resource allocation, and interdisciplinary advocacy. These non-clinical responsibilities directly shape workforce stability, perioperative efficiency, and ultimately the safety and accessibility of patient care.

By embedding structured negotiation and advocacy exercises into the simulation, learners engaged in scenarios that mirror real-world administrative and contractual dynamics. Practicing how to balance assertiveness with diplomacy in a psychologically safe environment equips trainees with transferable skills for interacting with hospital administrators, negotiating service agreements, and advocating for policies that support safe staffing and equitable access to anesthesia services. Importantly, these leadership skills extend beyond individual career advancement; they contribute to healthier labor–management relationships, reduced professional burnout, and more sustainable anesthesia delivery models.

For healthcare systems, fostering this dual competency of clinical excellence and effective negotiation and advocacy offers tangible benefits. Providers trained in structured communication are more likely to build collaborative partnerships with administrators, mitigate conflict during contract discussions, and contribute to resource stewardship. For patients, these dynamics translate into safer, more efficient perioperative environments, improved continuity of anesthesia services, and care models that are both high quality and cost-effective.

Framed in this way, the simulation not only advances educational objectives for individual learners but also supports the resilience and adaptability of healthcare systems. By preparing anesthesia practitioners to assume leadership roles in both clinical and business domains, AI-driven simulations may help bridge the gap between professional advocacy and patient-centered outcomes, aligning the interests of providers, institutions, and the populations they serve.

Implementation and Future Directions

From an implementation standpoint, the simulation was integrated into an existing leadership course with minimal disruption. Students required no additional training, and the AI interface was reported as intuitive, supporting its feasibility for broader integration across graduate programs. Future research should explore longitudinal outcomes, skill transfer to clinical practice, and expanded applications of AI-driven simulation in professional education.

Limitations

Several limitations should be acknowledged. Pre- and post-simulation survey responses were collected anonymously, which prevented matched-pair analysis and required the data to be treated as independent samples. This limited control for individual baseline differences and reduced statistical power, though the observed effect sizes still suggest a meaningful early impact. The study also employed a single-group pre/post design without a control or comparison group, making it difficult to establish causality; it remains unclear whether the observed changes were driven by the simulation itself or by other concurrent learning experiences. To protect participant anonymity, demographic data (such as age or gender) were not collected. As a result, potential differences in outcomes or thematic patterns across demographic groups could not be examined. Additionally, outcomes reflected short-term perceptions measured immediately after the simulation, leaving questions about long-term retention and the transfer of skills to clinical practice.

All measures were self-reported. Although these measures demonstrated high internal consistency, self-report is vulnerable to social desirability bias and cannot capture actual behavior change. The study was conducted with a single cohort of nurse anesthesia residents at one academic institution using a custom-developed AI simulation, which limits generalizability to other learner populations, contexts, or simulation platforms. Although the simulation produced formative feedback using an internal rubric, these automated scores were not externally validated and were therefore excluded from analysis; future research should evaluate the reliability and validity of AI-generated metrics alongside observer ratings or clinical assessments.

Conclusion

This pilot study demonstrates the feasibility and perceived educational value of AI-enhanced simulation for leadership communication training in nurse anesthesia education. Building on these results, future research should assess long-term skill retention and incorporate matched-pair designs to better capture individual growth. Comparative trials may help clarify the unique contributions of AI-based versus traditional simulation formats, while triangulated assessment methods, including observer ratings, AI-generated feedback, and learner reflection, could offer deeper insight into behavioral readiness.

By moving beyond procedural training, this approach strengthens communication, advocacy, adaptability, and leadership, which are identified in the COA’s graduate competencies as essential to safe practice and professional impact.1 AI-driven simulation also complements emerging modalities such as virtual reality, which provide scalable and immersive training opportunities. Together, these technologies can help students develop and apply critical competencies in personalized, context-rich environments that reflect the nuanced demands of modern healthcare.